Google has open-sourced the artificial intelligence tool that makes Portrait mode possible on its Pixel 2 smartphones.

The Google Pixel 2 and Pixel 2 XL, neither of which has a dual rear camera, offer Portrait mode on both the front and rear cameras. The feature is driven by an AI “semantic image segmentation model” known as “DeepLab-v3+” and implemented in TensorFlow.

According to a blog post by Liang-Chieh Chen and Yukun Zhu, software engineers at Google Research, “semantic image segmentation” refers to “assigning a semantic label, such as ‘road’, ‘sky’, ‘person’, ‘dog’, to every pixel in an image.”
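As a rough illustration of what per-pixel labeling means, the sketch below picks the highest-scoring class for each pixel of a toy 2x2 image. It is not DeepLab-v3+ code: the `label_pixels` helper, the four-entry label list and the hand-written scores are all assumptions made for the example, standing in for the output of a real network.

```python
import numpy as np

# Hypothetical label set; the names are taken from the examples quoted above.
LABELS = ["road", "sky", "person", "dog"]

def label_pixels(scores):
    """Pick the highest-scoring class for every pixel.

    scores: float array of shape (height, width, num_classes).
    Returns an int array of shape (height, width) holding class indices.
    """
    return np.argmax(scores, axis=-1)

# Toy 2x2 "image": hand-written scores stand in for a network's output.
scores = np.zeros((2, 2, len(LABELS)))
scores[0, 0, 1] = 1.0  # top-left pixel scores highest for "sky"
scores[0, 1, 1] = 1.0  # top-right pixel scores highest for "sky"
scores[1, 0, 2] = 1.0  # bottom-left pixel scores highest for "person"
scores[1, 1, 0] = 1.0  # bottom-right pixel scores highest for "road"

label_map = label_pixels(scores)
names = [[LABELS[i] for i in row] for row in label_map]
print(names)
```

The result has the same height and width as the input, with one label per pixel rather than one label for the whole image.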

The Google team said the technology will power various new applications, including the synthetic shallow depth-of-field effect in the Portrait mode of the Pixel 2 and Pixel 2 XL smartphones.

Explaining the feature, Google engineers said that once every pixel in the image has been assigned one of these labels, the software can automatically work out the outline of the subject for Portrait mode, whether that subject is a flower, a dog or a person. The rest of the image is then blurred, creating a shallow depth-of-field effect.
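The idea of keeping the labeled subject sharp while blurring everything else can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions, not Google's Portrait mode pipeline: `box_blur` and `synthetic_portrait` are hypothetical helpers, the subject mask is assumed to come from a segmentation model, and a uniform box blur stands in for whatever blur the camera software actually applies.

```python
import numpy as np

def box_blur(image, radius):
    """Blur an (H, W, C) float image with a (2*radius+1) x (2*radius+1) box filter."""
    height, width = image.shape[:2]
    # Repeat edge pixels so the output keeps the input's size.
    padded = np.pad(image, ((radius, radius), (radius, radius), (0, 0)), mode="edge")
    out = np.zeros_like(image, dtype=float)
    size = 2 * radius + 1
    # Sum every shifted copy of the image, then divide to get the mean.
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + height, dx:dx + width]
    return out / (size * size)

def synthetic_portrait(image, subject_mask, radius=2):
    """Keep subject pixels sharp and replace all other pixels with a blurred copy.

    image: float array (H, W, C); subject_mask: bool array (H, W).
    """
    blurred = box_blur(image, radius)
    # Broadcast the 2-D mask across the colour channels.
    return np.where(subject_mask[..., None], image, blurred)

# Usage: random pixels as a stand-in photo, a square in the middle as the "subject".
rng = np.random.default_rng(0)
photo = rng.random((8, 8, 3))
mask = np.zeros((8, 8), dtype=bool)
mask[2:6, 2:6] = True
portrait = synthetic_portrait(photo, mask)
```

Because the composite follows the mask exactly, any error in the predicted outline shows up directly as a blurred patch on the subject or a sharp patch of background.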

“Assigning these semantic labels requires pinpointing the outline of objects, and thus imposes much stricter localization accuracy requirements than other visual entity recognition tasks such as image-level classification or bounding box-level detection,” said the team in its blog post.
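One way to see why per-pixel labels are a stricter target than a bounding box is to compare the two for the same object. The sketch below is an assumption-laden toy, with `mask_to_box` as a hypothetical helper and a hand-made 5x5 mask as the object. It derives the tightest box from the mask and counts how many pixels each one covers.

```python
import numpy as np

def mask_to_box(mask):
    """Return the inclusive (top, left, bottom, right) box around the True pixels.

    Assumes the 2-D boolean mask contains at least one True pixel.
    """
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    return int(rows[0]), int(cols[0]), int(rows[-1]), int(cols[-1])

# Toy object: a diagonal stroke three pixels long inside a 5x5 image.
mask = np.eye(5, dtype=bool)
mask[0, 0] = False
mask[4, 4] = False

box = mask_to_box(mask)
box_area = (box[2] - box[0] + 1) * (box[3] - box[1] + 1)
mask_area = int(mask.sum())
print(box, box_area, mask_area)
```

The box can always be computed from the mask, but not the other way round: here the box spans nine pixels, of which only three belong to the object.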

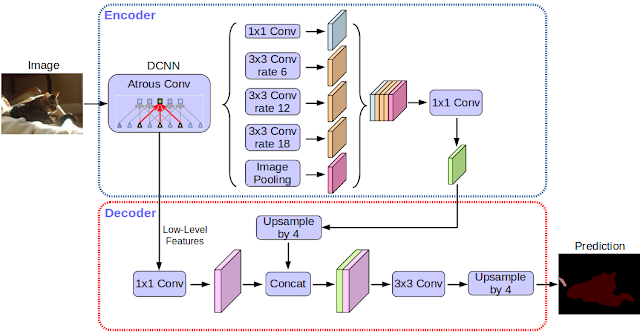

Other smartphone makers such as Apple and Samsung offer the same feature using dual sensors, but Google relies on AI-driven technology to achieve the effect on the Pixel 2 and Pixel 2 XL. The DeepLab-v3+ open source release includes “models built on top of a powerful convolutional neural network (CNN) backbone architecture for the most accurate results.”

As part of this release, the blog post said, “We are additionally sharing our Tensorflow model training and evaluation code, as well as models already pre-trained on the Pascal VOC 2012 and Cityscapes benchmark semantic segmentation tasks.”

Image segmentation has improved markedly over the last couple of years, and the Google team hopes the release will help academia and industry build new applications.